Google’s Programmable Search Engine, formerly known as the Custom Search Engine (or CSE), is a simple way to add basic search to a website. In just a few clicks, you can add a search box that more or less works for site-wide search.

The search box looks like this, complete with Google branding:

In addition, you can integrate this search with AdSense so that you get some ad revenue when someone clicks on an ad or sponsored link in the search results. It’s not much, only a tiny fraction of what you might get from other ads on your site, but it’s something.

However, you don’t have any control over how pages rank in the search results.

Here’s an example of what the search results looked like for one of my sites:

If you don’t really care about the user experience or look and feel of your site and want to give away all of the valuable information that search data can tell you, then you’re done.

If, however, you care about the user experience on your site and want to improve it over time, you’re better off finding or creating a different solution.

If you’re using a framework or CMS like WordPress, there are plenty of built-in and plugin solutions that will let you see what people actually searched for.

Let’s compare two pieces of data.

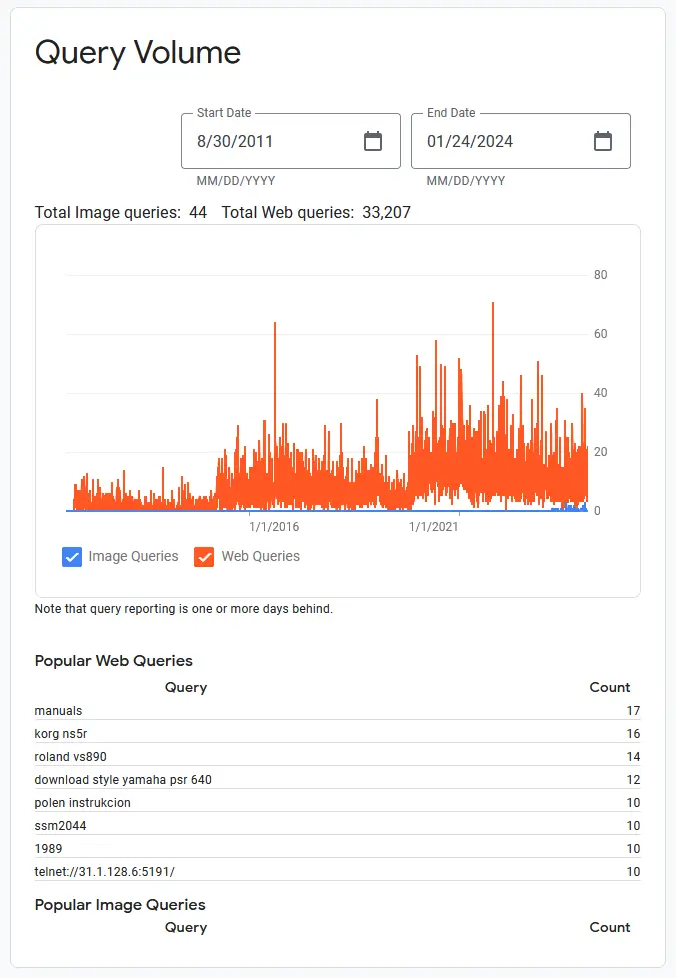

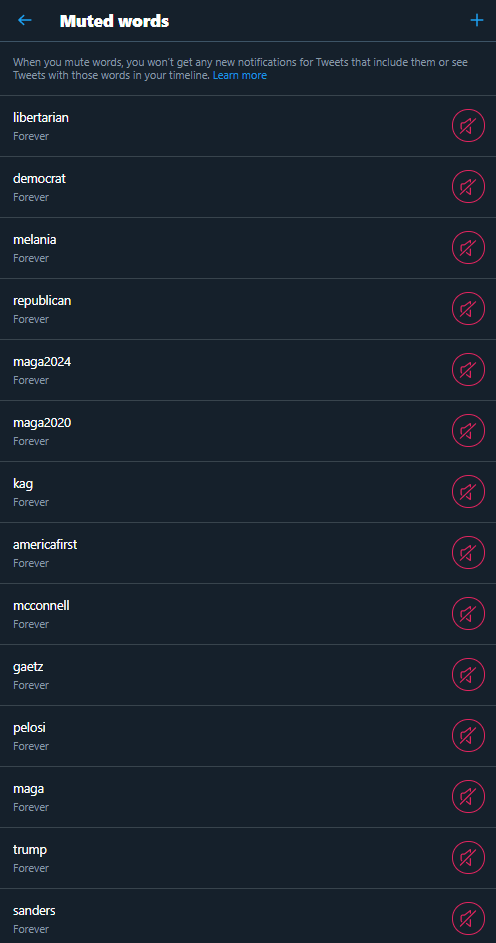

First, here is the Google Programmable Search report for my website, soundprogramming.net:

Note that there isn’t much useful information here. You can see the text of queries that people made more than 10 times, but that doesn’t amount to much. You lose ALL unique search queries and uncommon variations, and are left with almost nothing that you can use to improve your site content or user experience. This is all I get from more than 30,000 queries over more than a decade!

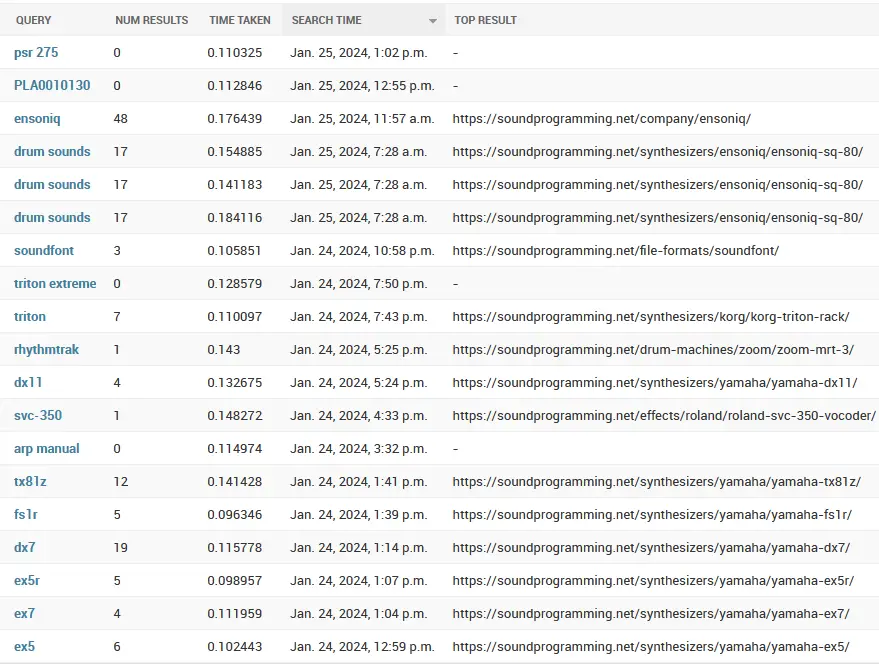

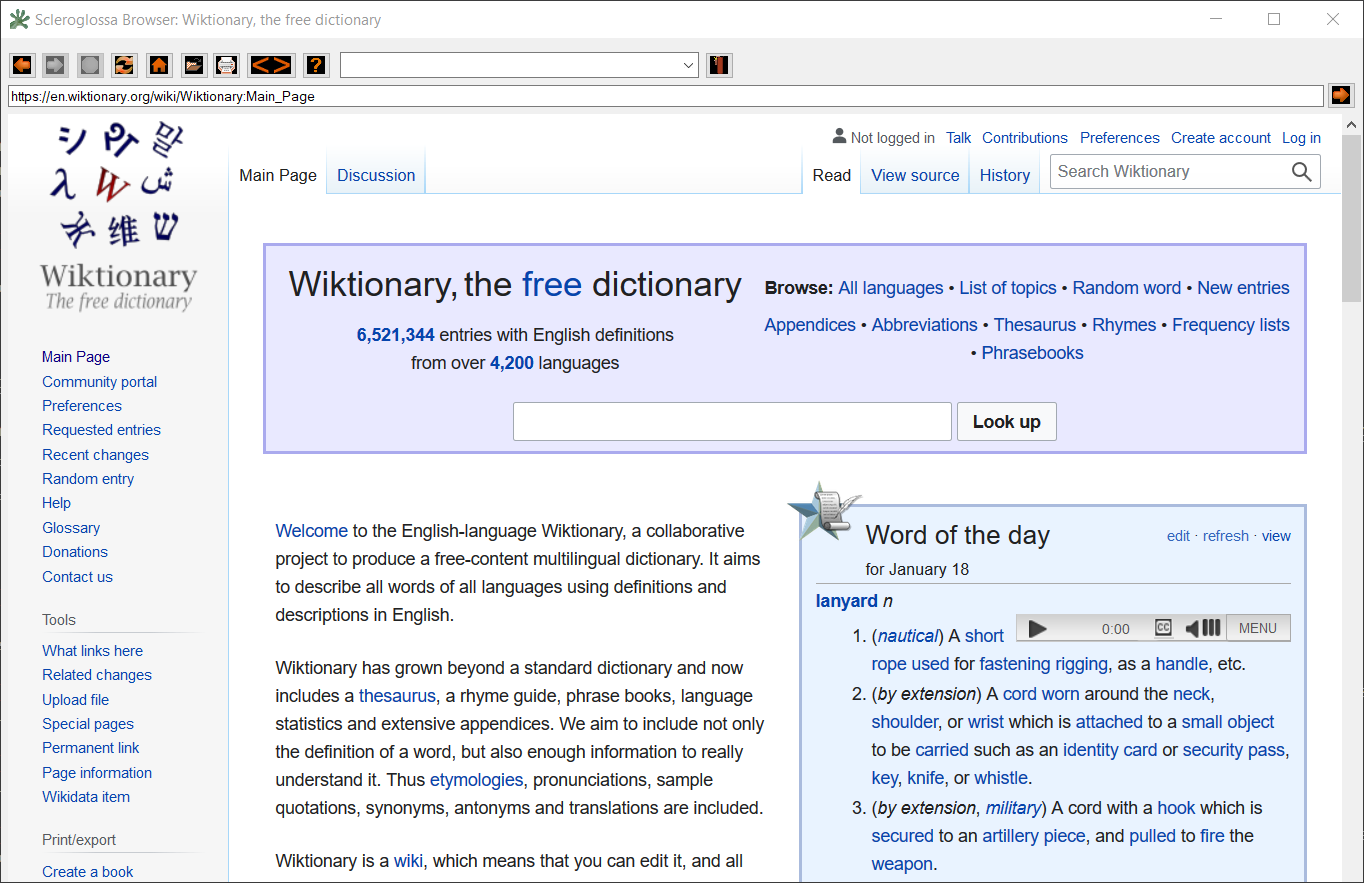

Second, here’s an example of what I saw in the first 24 hours after switching to my own site search:

In my case, I coded something from scratch, but you probably don’t have to. As I said, there are likely options and plugins for whatever platform you’re using.

Although some of these searches are from my own testing, I already have some useful information. For example, I had some information on the “triton” synthesizer, but nothing about the “triton extreme”. This was a clue that I should add that to my site (which I did). I would never have realized that I had left it out if I didn’t know what people were looking for. Even better, because I built this myself, I can see what the top search result was for each query. At a glance, this tells me whether people are getting what they want.
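The actual implementation behind this isn’t shown, so the following is only a minimal sketch of the kind of logging described above, assuming a Python backend with SQLite storage. The `search_log` table, the `log_search` and `queries_without_results` functions, and the example URL are all hypothetical. It records each raw query together with its top result, then lists the queries that returned nothing, which is how a gap like “triton extreme” would surface.

```python
import sqlite3
from datetime import datetime, timezone


def open_log(path=":memory:"):
    """Open (or create) a hypothetical search log: one row per search."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS search_log (
               ts TEXT NOT NULL,      -- UTC timestamp of the search
               query TEXT NOT NULL,   -- normalized query text
               top_result TEXT        -- URL of the first result, NULL if none
           )"""
    )
    return conn


def log_search(conn, query, results):
    """Record the query and the URL of the top-ranked result, if any."""
    top = results[0] if results else None
    conn.execute(
        "INSERT INTO search_log (ts, query, top_result) VALUES (?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), query.strip().lower(), top),
    )
    conn.commit()


def queries_without_results(conn):
    """Queries that returned nothing, most frequent first: content gaps."""
    rows = conn.execute(
        "SELECT query, COUNT(*) FROM search_log "
        "WHERE top_result IS NULL "
        "GROUP BY query ORDER BY COUNT(*) DESC, query"
    )
    return rows.fetchall()


# Usage with made-up data.
conn = open_log()
log_search(conn, "triton", ["/synths/korg-triton"])
log_search(conn, "Triton Extreme", [])
log_search(conn, "triton extreme", [])
print(queries_without_results(conn))  # [('triton extreme', 2)]
```

Trimming and lowercasing the query groups variants like “Triton Extreme” and “triton extreme” together, while every individual search still keeps its own row and timestamp.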

The value of this information is FAR more than the paltry few pennies that you’d get from Google’s custom search, and those pennies come at a cost — directing people away from your site.

In addition, I now have full control of how pages rank on my site. Because I know my site better than Google and have more context about my particular niche, I can provide more relevant prioritization of results.
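As a rough illustration of what this control can look like, here is a sketch of a naive keyword scorer in Python. Title matches count three times as much as body matches, and each page carries a hand-assigned `boost` that encodes your own knowledge of the site. The `Page` class, the weights, and the example URLs are assumptions for illustration, not the scheme used on the site above; a real site would more likely use a proper full-text index and layer similar weights on top of it.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    title: str
    body: str
    boost: float = 1.0  # hypothetical hand-tuned weight for this page


TOKEN = re.compile(r"[a-z0-9]+")


def tokens(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return TOKEN.findall(text.lower())


def score(page, terms):
    """Title hits weigh 3x body hits; the total is scaled by the page boost."""
    title_terms = tokens(page.title)
    body_terms = tokens(page.body)
    s = 0.0
    for t in terms:
        s += 3.0 * title_terms.count(t)
        s += 1.0 * body_terms.count(t)
    return s * page.boost


def search(pages, query, limit=10):
    """Return URLs of matching pages, best score first (ties by URL)."""
    terms = tokens(query)
    hits = [(score(p, terms), p.url) for p in pages]
    hits = [(s, url) for s, url in hits if s > 0]
    hits.sort(key=lambda pair: (-pair[0], pair[1]))
    return [url for _, url in hits[:limit]]


# Usage: promote a reference page, demote a forum thread.
pages = [
    Page("/synths/korg-triton", "Korg Triton",
         "Specs and history of the Korg Triton workstation.", boost=2.0),
    Page("/forum/triton-sounds", "Triton sounds",
         "Triton patches? My Triton is a Triton Rack.", boost=0.5),
]
print(search(pages, "Triton"))  # ['/synths/korg-triton', '/forum/triton-sounds']
```

With both boosts left at 1.0, the forum thread would outrank the reference page simply because it repeats the term more often; the boost is where your knowledge of the niche comes in.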

Hosting your own search is more work overall (although maybe just a few minutes), and you may need to do a bit of work to filter out bot and crawler traffic, but the information is valuable and worth having. You shouldn’t just give it away.
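One simple, admittedly incomplete, way to filter bot traffic is to drop searches whose user agent is missing or looks like a crawler or scripting tool. The sketch below assumes each logged search has the request’s User-Agent header stored next to it; the pattern list and the `looks_like_bot` and `human_searches` helpers are hypothetical starting points. A heuristic like this will miss bots that pretend to be browsers and can occasionally flag a real visitor.

```python
import re

# Hypothetical, deliberately short list of substrings common in
# crawler and scripting-tool user agents. Extend it from your own logs.
BOT_PATTERN = re.compile(
    r"bot|crawl|spider|slurp|curl|wget|python-requests|headless",
    re.IGNORECASE,
)


def looks_like_bot(user_agent):
    """Heuristic: a missing/blank or crawler-like user agent counts as a bot."""
    if not user_agent or not user_agent.strip():
        return True
    return bool(BOT_PATTERN.search(user_agent))


def human_searches(entries):
    """Keep only the queries from (query, user_agent) pairs that look human."""
    return [query for query, ua in entries if not looks_like_bot(ua)]


# Usage with made-up log entries.
entries = [
    ("triton extreme", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
     "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"),
    ("casino", "Mozilla/5.0 (compatible; Googlebot/2.1; "
     "+http://www.google.com/bot.html)"),
    ("test", ""),
    ("x", "python-requests/2.31.0"),
]
print(human_searches(entries))  # ['triton extreme']
```

Many sites also add a `Disallow` rule for their search results path in `robots.txt`, so well-behaved crawlers never request it in the first place.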